CarKar: In-Vehicle Gesture Control & Ambient Visualization System

Background

In modern vehicles, entertainment controls often distract the driver or are out of reach for rear-seat passengers. Touchscreens demand visual attention, which makes them a safety hazard for drivers. Our team identified a need to shift control to the passengers in a way that is intuitive and "eyes-free." We aimed to create a digital ecosystem in which devices throughout the vehicle communicate to synchronize music control and ambient lighting.

Tasks

My primary goals were to push the boundaries of music visualization and to make communication between devices seamless.

The specific project objectives were:

Gesture Control: Create a sensor-based input system to replace physical buttons and touchscreens.

Music Visualization: Engineer a system where lighting reacts dynamically to audio frequencies.

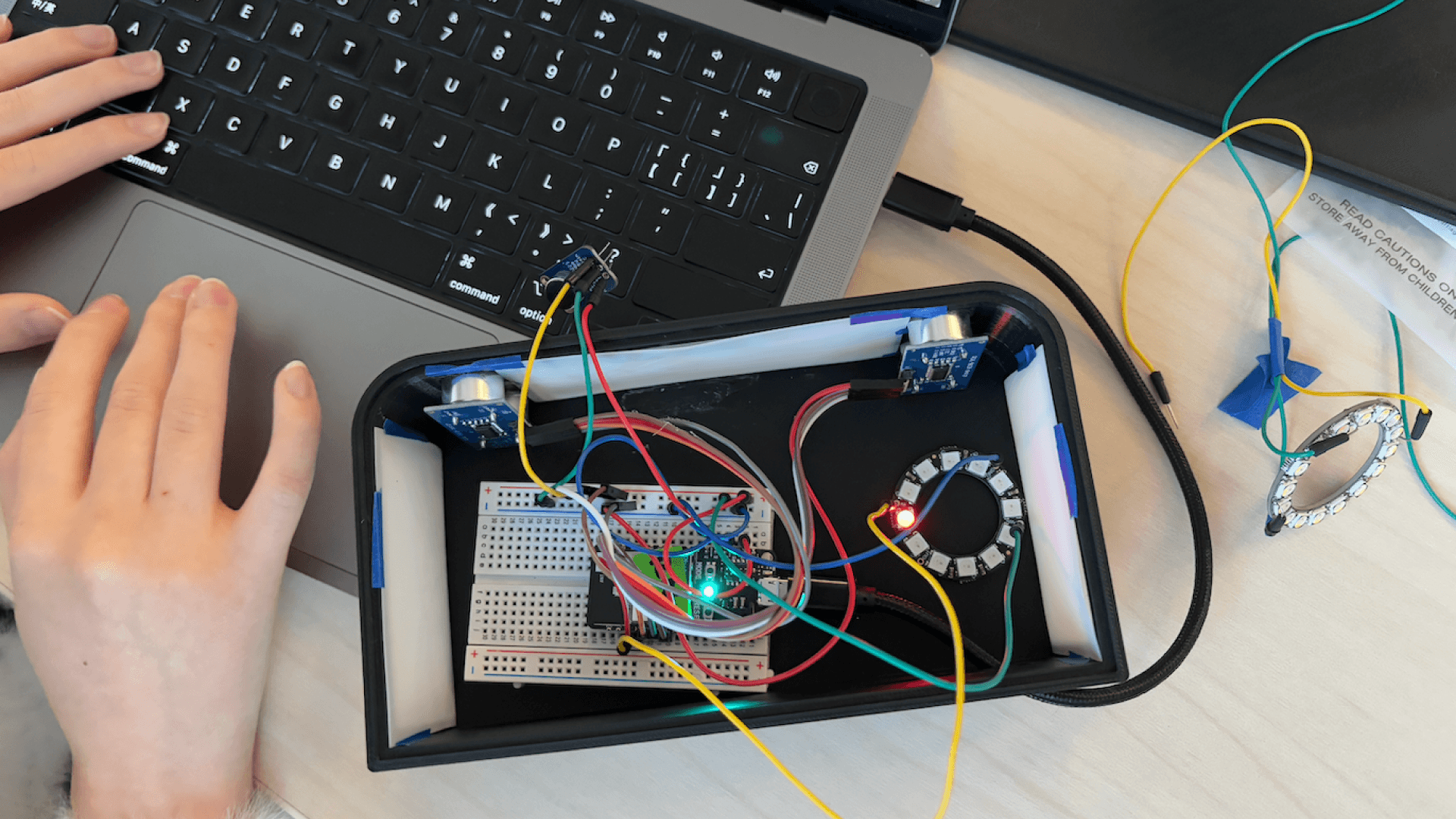

System Integration: Design the physical housing and manage power distribution for multiple components.

Actions

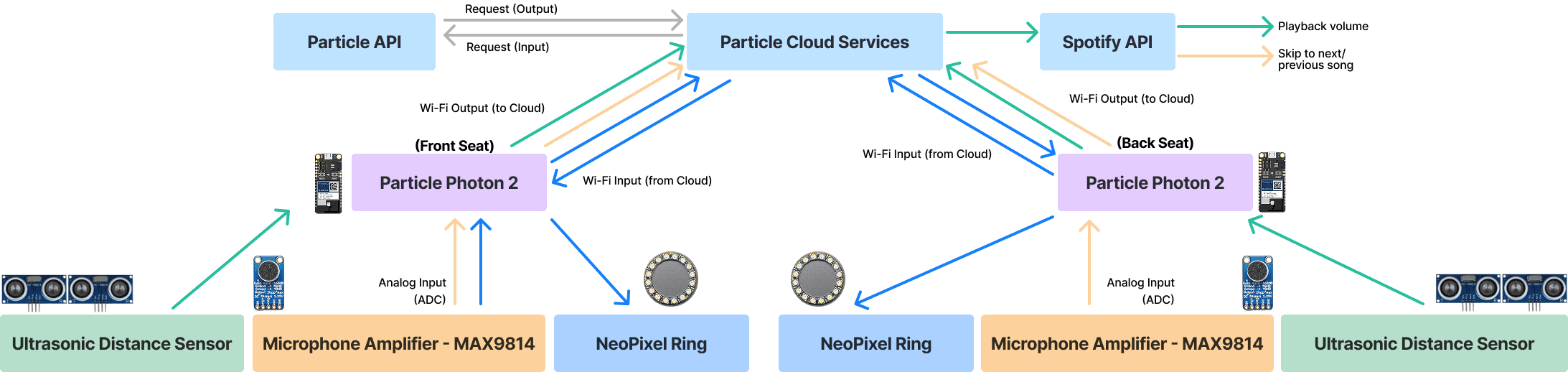

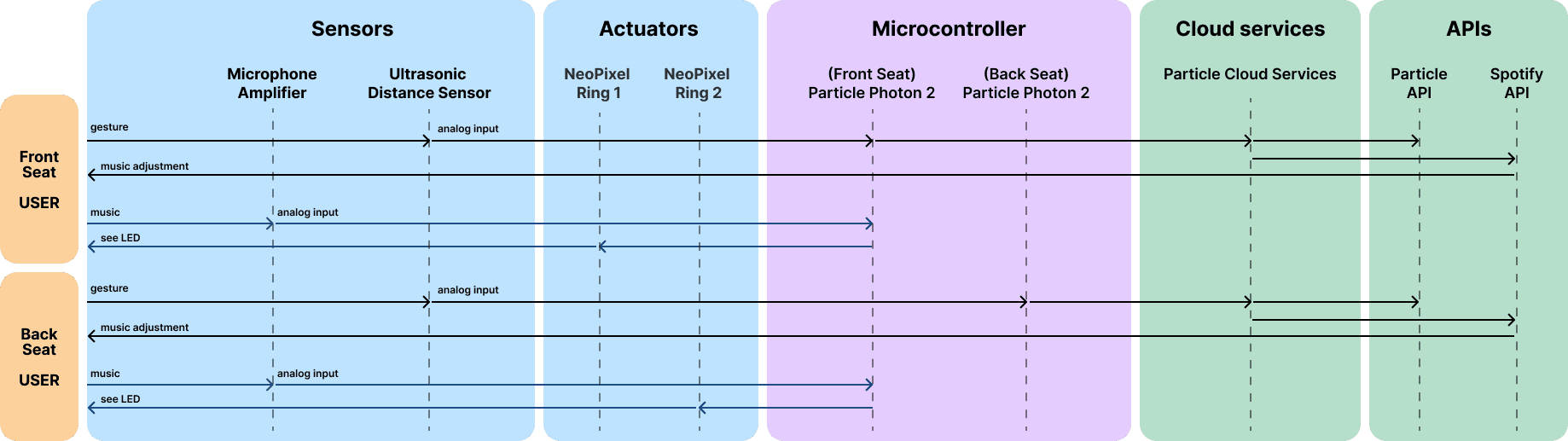

Architecture & Connectivity

We designed a dual-device architecture with one unit for the front seat and one for the back. I used Particle Cloud services to let the two devices communicate wirelessly. When a passenger gestures at the back-seat device, it sends a signal to the cloud, which triggers the music control API and syncs the LED state on the front-seat device.

Interaction Design: Gestures

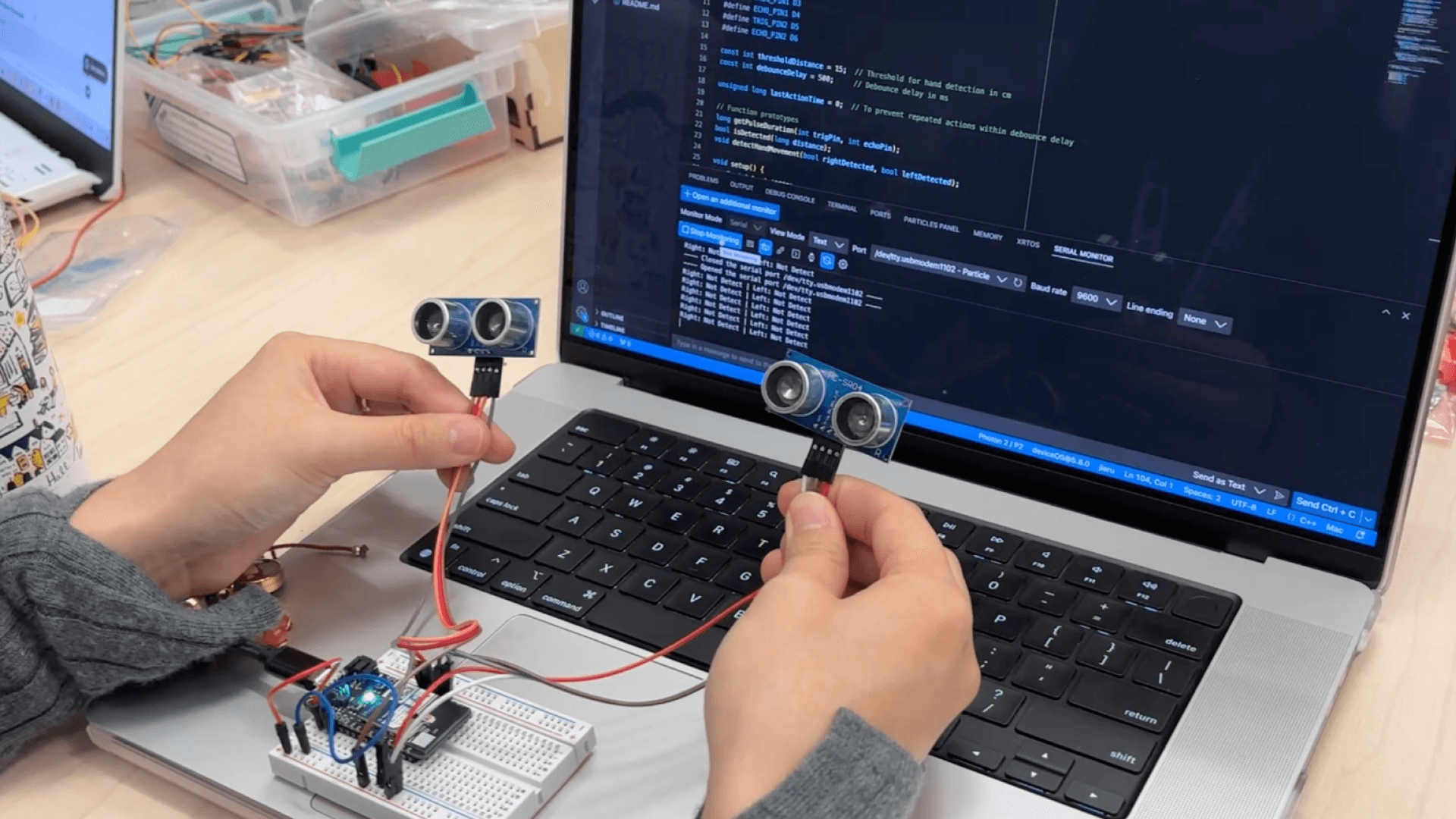

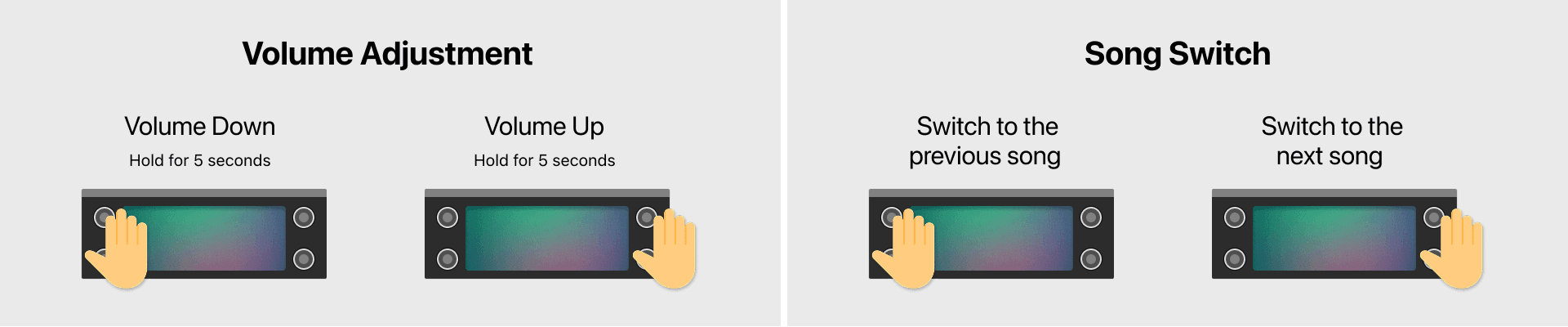

We implemented specific hand gestures using ultrasonic distance sensors to ensure intuitive control:

Volume Control: Holding a hand in front of the sensor for 5 seconds triggers a gradual volume adjustment.

Track Switching: A wave gesture allows users to skip to the next or previous song.

Visualizing Sound

I led the development of the "Music Dynamic Display." I wired a microphone amplifier to the Photon 2 to detect audio frequencies, then wrote C++ code to translate those frequencies into lighting patterns on NeoPixel rings. This required solving a critical hardware challenge: the Photon 2 operates at 3.3V, while the NeoPixels require 5V. I re-engineered the power distribution so the LEDs ran brightly and consistently without crashing the microcontroller.

Iterative Prototyping

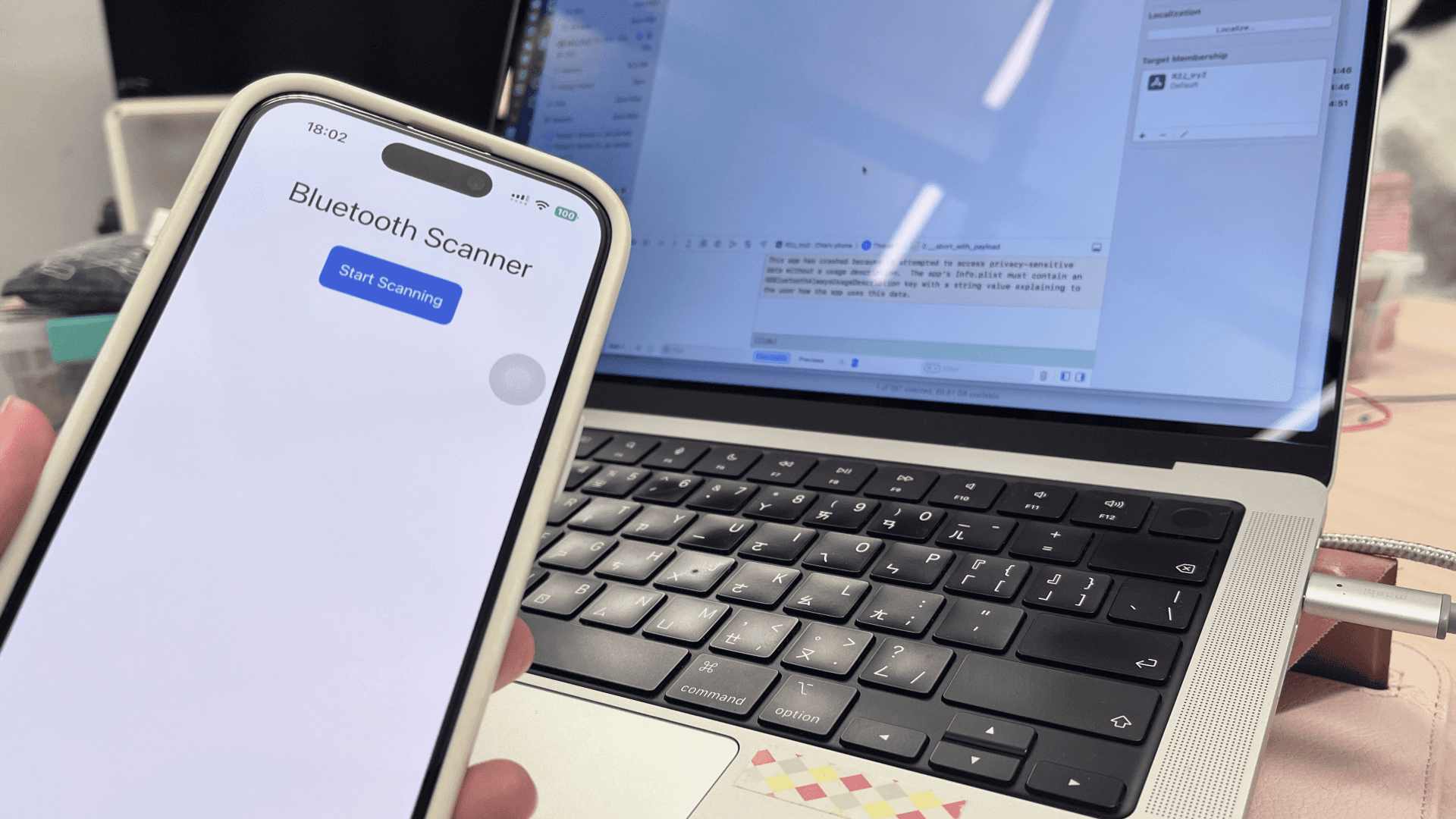

I initially attempted to build a custom iOS app to control the music via Bluetooth. However, I discovered that iOS permission restrictions on Bluetooth control made this approach unworkable. Pivoting quickly, I shifted focus to solidifying the hardware build and 3D modeling enclosures that hold the electronics securely in a vehicle environment.

Outcome

The final prototype successfully demonstrated a functional in-car ecosystem:

Cloud Synchronization: Successful low-latency communication between front and rear units.

Immersive Ambience: The LED rings reacted in real time to the beat of the music, enhancing the passenger experience.

Safety & Usability: The gesture system effectively removed the need for passengers to reach for the dashboard or distract the driver.

Reflection

Technical Constraints as Design Drivers

This project taught me that technical constraints, such as voltage mismatches and platform Bluetooth permissions, must be addressed early in the design process. The failed iOS app attempt was a valuable lesson in researching API limitations before starting development.

Systems Thinking

I learned to view the product not just as a standalone device, but as part of a larger "Anthropogenic Environment." By decentralizing control from the driver to the passengers, we altered the social dynamic of the car ride, making passengers active co-creators of the journey rather than passive observers.

Future Speculations

Moving forward, I envision integrating AI to analyze passenger behavior. For example, the system could detect if passengers are falling asleep and automatically dim the lights and lower the volume, creating a truly responsive and empathetic vehicle environment.